Reading time: 5 min

Redefining Document Intelligence: Moving Beyond RAG with Long-Context AI and Embedded Citations

Modern LLMs revolutionize document analysis - enabling holistic, auditable insights that surpass traditional RAG and chunking approaches.

Article written by

Sam Lansley

Advances in artificial intelligence are transforming document analysis in high-compliance sectors. For years, Retrieval-Augmented Generation (RAG) and vectorization have been the default approach for surfacing relevant information from vast document sets. However, the rapid evolution of large language models (LLMs), specifically those supporting dramatically expanded context windows, changes the picture. It is now possible to evaluate entire contracts, regulatory reports, or legal case bundles as unified entities, which improves both the accuracy and the transparency of the analysis.

Limitations of Classic RAG and Vectorization

Traditional RAG workflows rely on splitting documents into small “chunks,” converting these into embeddings, and then storing them in a vector database. When queried, the system retrieves the fragments it scores as most relevant and passes only those to the LLM to generate a response. While powerful for simple search or lookup tasks, chunked RAG comes with a significant drawback: it breaks document continuity. This issue is especially pronounced in domains where multi-hop reasoning across sections is essential for accurate analysis.
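To make the continuity problem concrete, here is a minimal sketch of a chunk-and-retrieve pipeline. It is an illustration only: the `embed` function is a toy word-count stand-in for a real embedding model, a plain list stands in for the vector database, and the two-clause contract text is invented for the example.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

# Invented example: the definition lives in one clause, the obligation in another.
contract = (
    "Clause 2: Effective Date means the first day of March. "
    "Clause 9: The Supplier shall deliver within thirty days of the Effective Date."
)
chunks = chunk(contract, size=10)
top = retrieve("When must the Supplier deliver?", chunks, k=1)
print(top[0])
```

In this toy run the best-scoring chunk mentions the Supplier's obligation but not the definition of the Effective Date, which sits in a different chunk. The model would have to answer without the one fact that makes the deadline computable.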

Consider a scenario such as legal document review: evidence or definitions distributed across various sections must be interpreted together. A chunked RAG approach may isolate one fragment and lose critical interdependencies, undermining the reliability of assessment. Fragmentation became commonplace due to early LLMs’ restricted context windows, not due to inherent technical superiority.

The Rise of Long-Context LLMs

Some of today’s leading LLMs can process up to 1M tokens in a single context window, enough to hold entire case files or lengthy contracts as single documents. This leap means models can reason holistically over the full document rather than over isolated fragments. Recent academic research suggests that as context windows grow, traditional RAG and vectorization lose their edge, with long-context models outperforming chunk-based retrieval, especially on complex question-answering and document comprehension tasks.
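A quick way to judge whether a document bundle is a candidate for whole-document processing is to estimate its token count against the window. The sketch below rests on two assumptions: a rough heuristic of about four characters per token for English text (a real tokenizer will give different numbers) and a hypothetical `reserve` of tokens held back for the question and the answer.

```python
import math

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the 4-chars-per-token ratio is an assumption."""
    return math.ceil(len(text) / chars_per_token)

def fits_in_context(texts: list[str],
                    context_limit: int = 1_000_000,
                    reserve: int = 50_000) -> bool:
    """True if all texts plus a reserve for prompt and answer fit the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve <= context_limit

# Synthetic stand-ins for real files: about 300k estimated tokens in total.
bundle = ["a" * 400_000, "b" * 800_000]
print(fits_in_context(bundle))
```

For a production check, the estimate would be replaced by the tokenizer of the model actually in use.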

Embedded Citations: The Modern Approach

The new best practice is to ingest full documents or substantial sections without splitting them into disjointed database entries. Instead, unique identifiers are injected throughout the document so that each portion remains individually addressable. This means every answer generated by the model can directly reference its exact source material, supporting verifiable, in-context retrieval.
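One way to picture identifier injection is the sketch below. It assumes paragraph-level granularity and a `[P<n>]` marker format, both chosen purely for illustration; the `answer` string is a hypothetical model response, not output from a real system.

```python
import re

def tag_paragraphs(document: str) -> tuple[str, dict[str, str]]:
    """Prefix each paragraph with an ID such as [P1].

    Returns the tagged text (to send to the model) and an ID -> paragraph index.
    """
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    index = {f"P{i}": p for i, p in enumerate(paragraphs, start=1)}
    tagged = "\n\n".join(f"[{pid}] {text}" for pid, text in index.items())
    return tagged, index

def resolve_citations(answer: str, index: dict[str, str]) -> dict[str, str]:
    """Map every [P<n>] marker found in the answer to its source paragraph."""
    cited = re.findall(r"\[(P\d+)\]", answer)
    return {pid: index[pid] for pid in cited if pid in index}

document = (
    "Effective Date means 1 March.\n\n"
    "The Supplier shall deliver within 30 days of the Effective Date."
)
tagged, index = tag_paragraphs(document)

# Hypothetical model answer that cites the identifiers it relied on.
answer = "Delivery is due within 30 days of 1 March [P2][P1]."
sources = resolve_citations(answer, index)
for pid, text in sources.items():
    print(pid, "->", text)
```

The whole tagged document goes to the model in one piece, so the definition and the obligation stay together, while the markers give each statement a precise address to cite.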

Such a method preserves comprehensive document context while ensuring that every claim can be traced back to its origin. Like academic referencing, embedded citations provide transparency and help keep the analysis grounded in clear, trackable evidence, a vital requirement for any audit-heavy or compliance-driven organisation.
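Traceability can also be checked mechanically. The following sketch assumes citations appear as `[P<n>]` markers inside each sentence and that the set of valid identifiers is known; the function name `audit_answer` and the sample answer are hypothetical. It flags citations that point at nothing and sentences that cite nothing.

```python
import re

CITATION = re.compile(r"\[(P\d+)\]")

def audit_answer(answer: str, known_ids: set[str]) -> dict[str, list[str]]:
    """Report unknown citation IDs and sentences that carry no citation."""
    unknown = sorted({c for c in CITATION.findall(answer) if c not in known_ids})
    # Naive sentence split on terminal punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    uncited = [s for s in sentences if not CITATION.search(s)]
    return {"unknown_ids": unknown, "uncited_sentences": uncited}

report = audit_answer(
    "Delivery is due 30 days after the Effective Date [P2]. "
    "The penalty is 5% [P9]. Termination is immediate.",
    known_ids={"P1", "P2"},
)
print(report)
```

A check like this does not prove that a cited passage supports the claim, only that the reference exists; a reviewer still needs to read the source.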

Maximizing Auditability, Context, and Trust

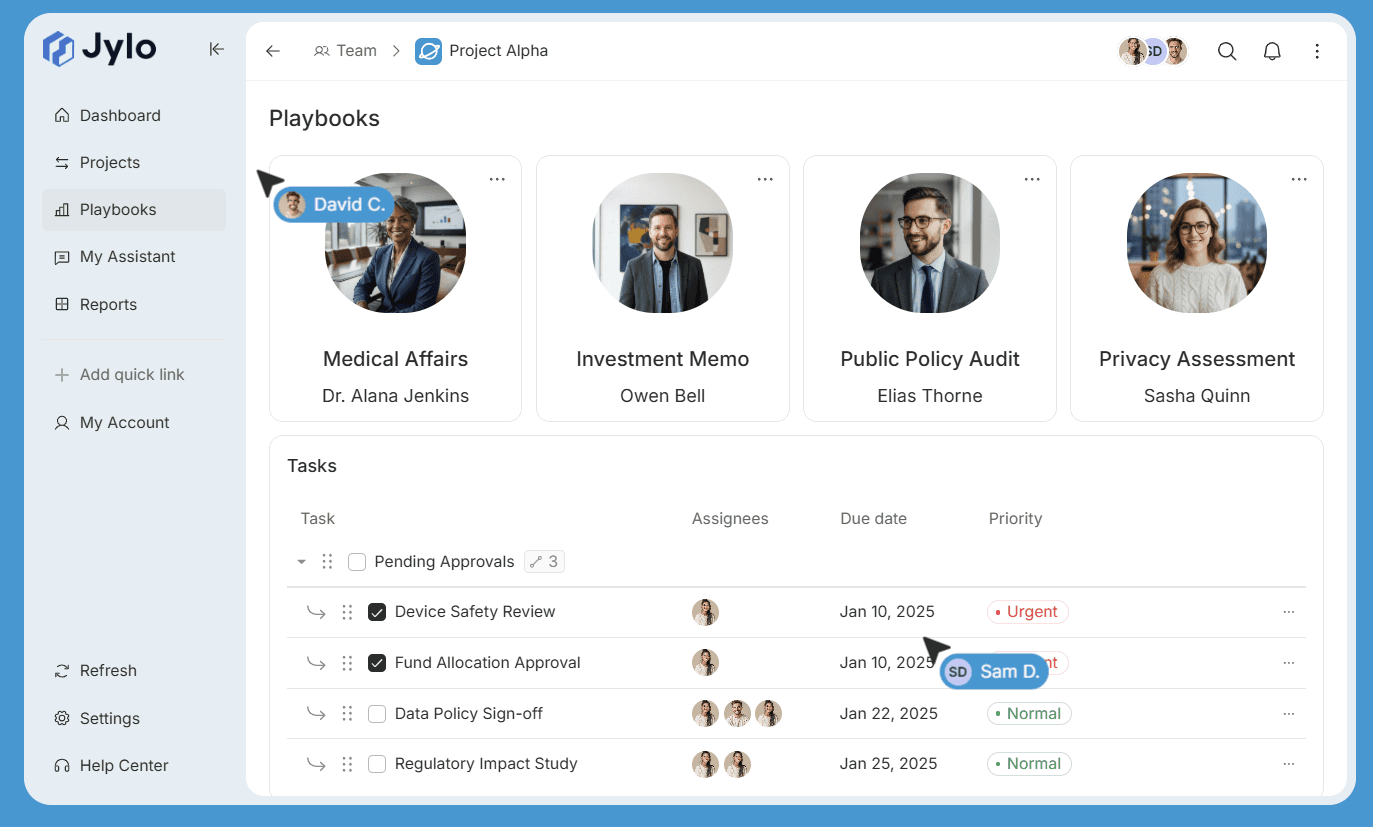

Unlike legacy RAG pipelines, where important information can be lost to fragmentation, long-context processing combined with embedded citations keeps the full document in view. Modern AI platforms like Jylo can internally “vectorize” documents, keeping context whole while supporting granular referencing and evidence tracking. For workflow and quality assurance, this approach strengthens process integrity, reliability, and audit trails, setting a new standard for LLM-based document intelligence.

Conclusion

As AI advances, digital transformation strategies must evolve. Long-context LLMs with embedded citations offer organisations a leap forward - delivering deeper insights, ironclad auditability, and transparent decision-making. It's time to move beyond the limitations of chunked RAG and embrace the future of comprehensive, trustworthy document analysis.
