The AI Supply Chain Is Eating Itself

AI isn’t just about models. It’s a fragile supply chain where everyone is competing for data, margins, and control.

Article written by

Shawn Curran

The AI industry is often discussed in terms of model quality, benchmarks, and breakthroughs. But beneath the surface, a much more important battle is unfolding: the economics of the AI supply chain.

Who owns the data? Who owns the customer? Who captures the margin?

Right now, the answers to those questions are unstable. And that instability is reshaping how frontier model providers, hyperscalers, wrappers, professional services firms, and end customers behave.

From Consumers to Enterprises: How Data Really Flows

Most leading AI companies today still rely heavily on consumer adoption. ChatGPT is the clearest example: hundreds of millions of individuals use it daily. But many of those individuals are not “just” consumers. They are employees using AI on behalf of their companies - often informally, through shadow IT.

This matters because it creates a quiet but massive data pipeline from enterprises into consumer-facing AI systems.

Anthropic has been unusually transparent about this dynamic. Its privacy policy makes clear that models require ongoing inputs to improve. In other words: continuous access to real-world usage data is fundamental to sustaining model quality.

At the same time, Anthropic has made a strong push into enterprise, including high-profile moves like its legal AI integrations. These tools target not law firms or legal tech vendors but the very top of the value chain: the clients of law firms, the “golden goose.”

That raises a fundamental question.

Why would a company whose revenue is largely API-driven - serving wrappers and downstream developers - try to move upstream and compete with its own customers?

And why double down on enterprise, where contracts explicitly prohibit using customer data for training?

The Anthropic Paradox

Roughly speaking, most frontier model companies still depend on APIs and developer ecosystems for revenue. Their first- and second-degree customers are the wrappers, platforms, and internal enterprise builders that consume those APIs.

By going directly after enterprise end-users, they risk cannibalising those relationships.

After publicly distancing itself from OpenAI’s advertising model, Anthropic has also limited its monetisation options. Ads are off the table. Consumer-scale data capture is weaker. Enterprise data is locked away.

So what is the strategy?

From the outside, it increasingly looks like a bet on moving further up the stack: sacrificing early ecosystem partners to capture “third-degree” customers directly.

But that is a dangerous play. It shrinks the ecosystem that made growth possible in the first place.

Mapping the AI Value Chain in Legal Services

To understand this dynamic, it helps to map the stack. Using legal as an example:

Internet → Frontier Model Vendor → Hyperscaler → Wrapper → White Collar Service Seller → White Collar Service Buyer

Each layer faces its own pressures.

1. The Internet

More content is moving behind paywalls. High-quality proprietary data is increasingly closed.

That means models trained primarily on open web data will become stale over time. They will be exposed less and less to emerging ideas, niche expertise, and real professional workflows.

2. Frontier Model Vendors

These companies face brutal economics:

Massive capital requirements

Rapid commoditisation

Little product differentiation

Persistent losses

Growing competitive pressure

With models converging in capability, pricing power erodes. This pushes vendors toward diversification: enterprise deals, vertical apps, advertising, and direct distribution.

But each of these moves risks alienating partners.

3. Hyperscalers

AWS, Microsoft, and Google sit underneath everything. They host the models, sell compute, and resell tokens.

They often earn reliable margins without owning the models themselves. Google, which builds its own frontier models, is the exception.

In many ways, they are the quiet winners of the current structure.

4. Wrappers

Wrappers build interfaces, workflows, and vertical solutions on top of models.

But structurally, they have weak moats:

No control over core technology

Supplier can compete directly

Pricing pressure from both sides

Many aim for high gross margins, often marking up tokens substantially. As usage scales, those margins become very visible to customers.

5. White Collar Service Sellers

These are law firms, consultancies, and professional services providers.

They face hard choices:

Build in-house

Rely on wrappers

Go direct to model vendors

Wrappers targeting 70–80% gross margins increasingly look expensive at scale. Over time, that markup becomes a major cost line.
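To put rough numbers on that markup: a gross margin m on resold tokens implies a price of cost / (1 - m). The sketch below is illustrative only. The function names and the 100,000 monthly spend are assumptions made for the example, not figures from any vendor, and it treats underlying token cost as the wrapper's only cost of goods sold.

```python
def markup_multiple(gross_margin: float) -> float:
    """Price as a multiple of cost for a given gross margin (0 <= margin < 1)."""
    if not 0 <= gross_margin < 1:
        raise ValueError("gross_margin must be in the range [0, 1)")
    # gross margin = (price - cost) / price, so price = cost / (1 - margin)
    return 1 / (1 - gross_margin)


def wrapper_price(token_cost: float, gross_margin: float) -> float:
    """What the customer pays for tokens that cost the wrapper `token_cost`."""
    return token_cost * markup_multiple(gross_margin)


# Hypothetical monthly token spend at direct model pricing (assumed figure).
monthly_token_cost = 100_000

for margin in (0.70, 0.80):
    multiple = markup_multiple(margin)
    billed = wrapper_price(monthly_token_cost, margin)
    print(f"{margin:.0%} gross margin -> {multiple:.2f}x token cost, "
          f"billed {billed:,.0f}")
# 70% gross margin -> 3.33x token cost, billed 333,333
# 80% gross margin -> 5.00x token cost, billed 500,000
```

On these assumptions, a 70–80% gross margin means the buyer pays roughly 3.3 to 5 times the underlying token cost, which is why the markup becomes hard to ignore as volumes grow.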

But going direct raises another issue: what is the long-term business model of frontier model providers if they cannot monetise data and cannot sustain premium pricing?

6. White Collar Service Buyers

Corporate clients increasingly ask:

Why not cut out the middle?

Why pay law firms and wrappers when we can license tools and connect directly to models?

If this trend accelerates, it could hollow out much of today’s professional services and AI tooling ecosystem.

A System Under Stress

Put simply: every layer is trying to move into every other layer.

Model providers are becoming application companies. Some wrappers want to share value with their end customers. Service firms want technology platforms. Buyers want to internalise everything.

This creates constant conflict.

And it makes the system fragile.

Possible Industry Outcomes

Several consolidation paths are emerging.

1. Hyperscalers Acquire Model Providers

This seems increasingly likely.

As venture funding tightens and training costs rise, independence becomes harder to sustain. Hyperscalers have the balance sheets and infrastructure.

In this world:

Anthropic aligns with Amazon

OpenAI aligns with Microsoft

Others fold into Google or AWS

These companies have decades of experience balancing platform competition with ecosystem support. Think of the partner ecosystems that grew up around SharePoint, Windows, and CRM platforms.

They know how to compete at the app layer without destroying partners.

2. Wrappers and Service Firms Merge

Some legal and professional AI vendors are exploring revenue-sharing and deeper integration with service providers.

But buyers are unlikely to accept permanent fee-sharing.

Wrappers lack strong moats. There will always be alternatives. And most wrappers can diversify across multiple model suppliers.

3. Vertical Platform Rollups

In sectors like healthcare, finance, and compliance, we may see vertically integrated AI platforms combining models, workflows, and service layers under one brand.

Such platforms would try to own the entire stack for a specific industry.

4. Open and Self-Hosted Ecosystems

As costs scale, more enterprises will license software and host it themselves on Azure or AWS.

They will pay:

Fixed fees for interfaces and workflows

Direct hyperscaler pricing for inference

Token margins disappear. Volume discounts dominate.
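A rough way to see when self-hosting starts to pay off is to compare the two cost curves: a wrapper that bills tokens at a gross margin, versus a fixed licence fee plus inference at direct hyperscaler prices. The sketch below is a simplified model under stated assumptions. All names and numbers (a price of 10 per million tokens, a 75% margin, a 60,000 fixed fee) are hypothetical, and it ignores hosting overheads, staffing, and volume discounts.

```python
def wrapper_cost(tokens_m: float, direct_price_per_m: float,
                 gross_margin: float) -> float:
    """Total bill when a wrapper resells tokens at the given gross margin."""
    return tokens_m * direct_price_per_m / (1 - gross_margin)


def self_hosted_cost(tokens_m: float, direct_price_per_m: float,
                     fixed_fee: float) -> float:
    """Fixed licence fee plus inference billed at direct pricing."""
    return fixed_fee + tokens_m * direct_price_per_m


def break_even_tokens_m(direct_price_per_m: float, gross_margin: float,
                        fixed_fee: float) -> float:
    """Volume (millions of tokens) at which both options cost the same."""
    if not 0 < gross_margin < 1:
        raise ValueError("gross_margin must be strictly between 0 and 1")
    # The wrapper's markup per million tokens is p * m / (1 - m);
    # self-hosting wins once the accumulated markup exceeds the fixed fee.
    markup_per_m = direct_price_per_m * gross_margin / (1 - gross_margin)
    return fixed_fee / markup_per_m


# Hypothetical inputs, chosen only to illustrate the shape of the trade-off.
price_per_m = 10.0      # direct price per million tokens (assumed)
margin = 0.75           # wrapper gross margin on tokens (assumed)
licence_fee = 60_000.0  # fixed fee for interfaces and workflows (assumed)

volume = break_even_tokens_m(price_per_m, margin, licence_fee)
print(f"Break-even at {volume:,.0f} million tokens")
# Break-even at 2,000 million tokens
```

Below the break-even volume the wrapper is cheaper; above it, every additional token widens the gap in favour of the fixed-fee model. Real contracts will differ, but the direction of the incentive is the same.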

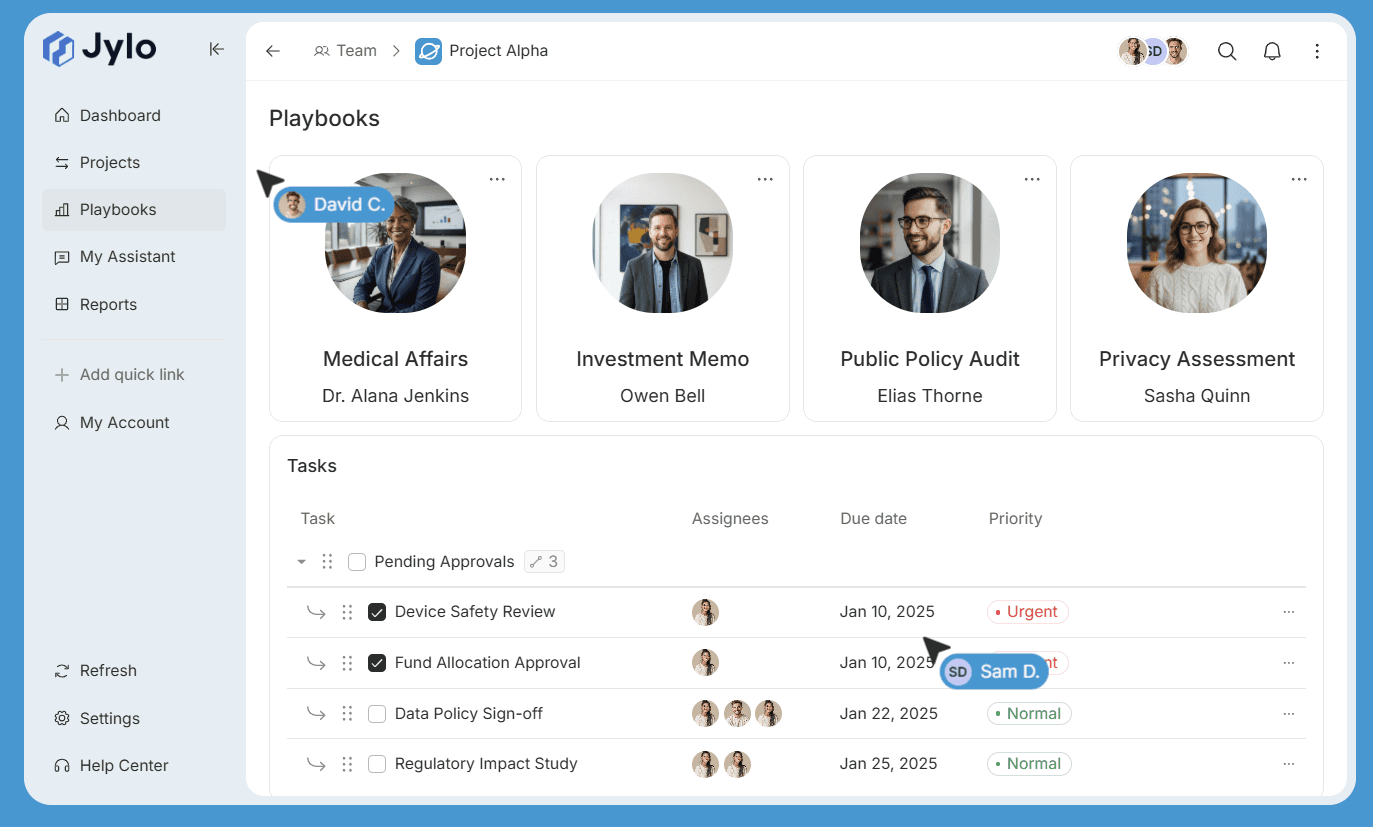

Jylo’s View

At Jylo, we see this playing out in a fairly predictable way.

When venture capital dries up, hyperscalers will consolidate frontier model providers.

OpenAI becomes Microsoft-native. Anthropic becomes Amazon-native.

These platforms will continue competing with their own products (like Copilot) while still supporting wrappers, service sellers, and enterprise builders.

As AI usage grows - and as continuous inference becomes standard - margins will matter more than hype.

Professional services firms and buyers will increasingly:

License tools

Host internally

Control their infrastructure

Minimise token markups

This is economically rational.

The Knife Edge of Knowledge Work

We are at a turning point.

If every layer keeps trying to eat the next one up the stack, the ecosystem becomes unstable. Trust erodes. Partnerships collapse. Innovation slows.

We have already seen how aggressive moves - like Anthropic’s recent positioning of its plugin - can unsettle the market.

Ironically, nobody really wins in that scenario.

Not model providers. Not wrappers. Not service firms. Not customers.

The healthiest outcome is one where:

Each layer specialises

Margins are transparent

Data rights are respected

Infrastructure remains neutral

Everyone “eats their own lunch” instead of trying to steal someone else’s.
